Hardware Setup

Software Setup

Operating System

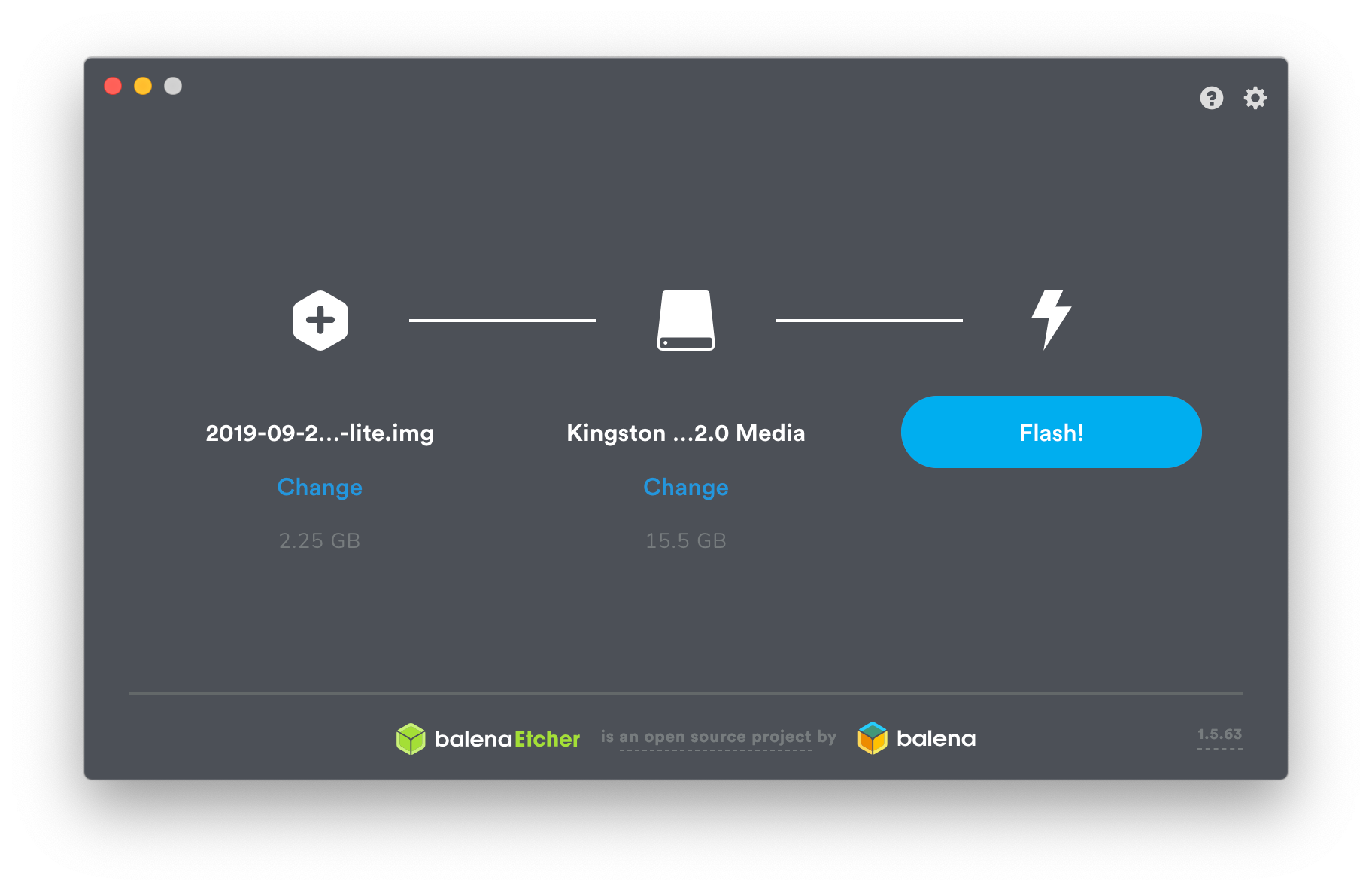

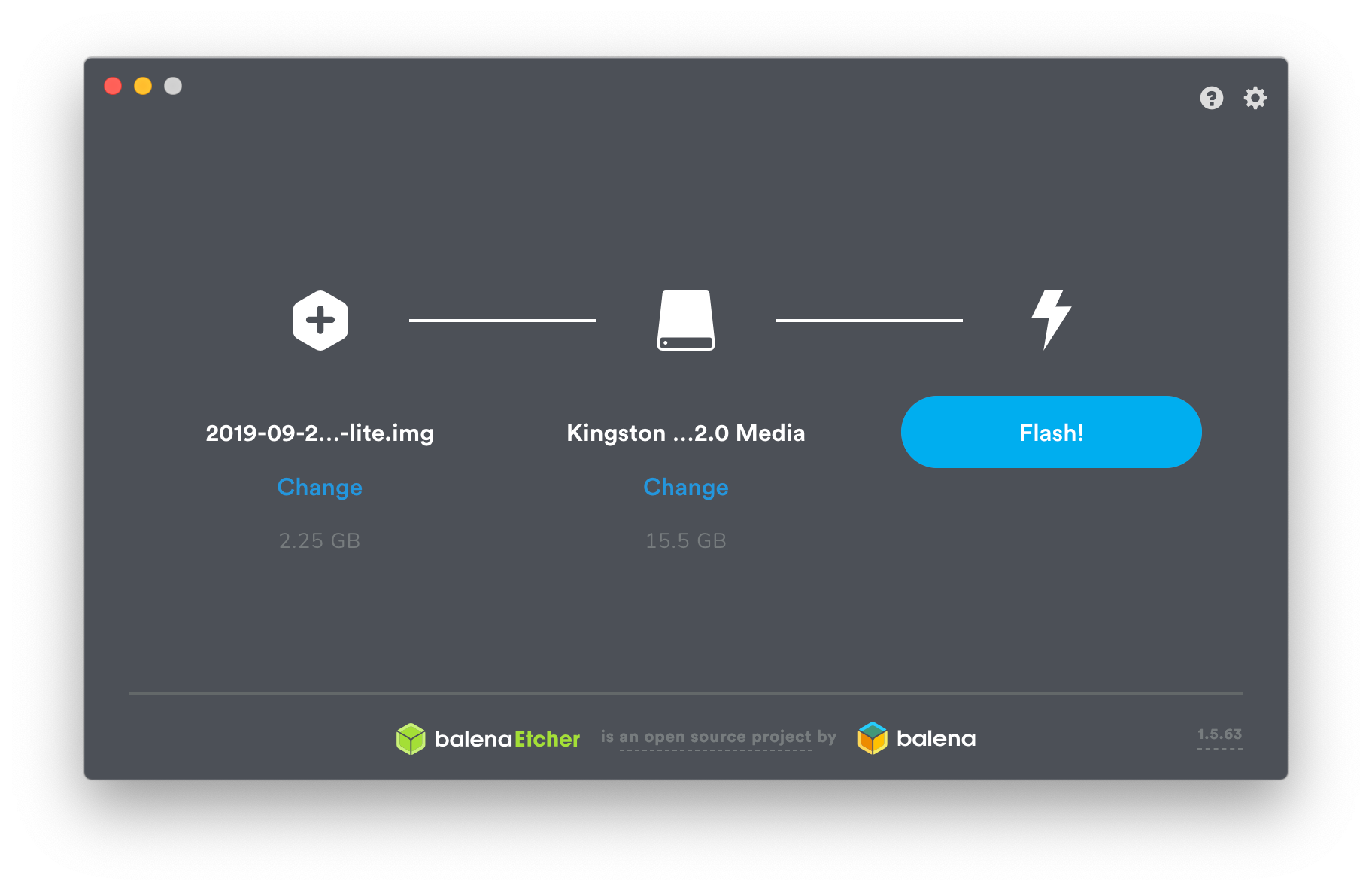

Flash SD Card

Copy SSH Key

```
$ ssh-copy-id -i ~/.ssh/id_rsa pi@raspberrypi.bierochs.org
```

Adjust the Raspberry Pi Operating System

On each Pi:

- Change the password
- Set a static IP address
- Enable container features (cgroups)
- Change the hostname
- Update the operating system
- Reboot

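```
pi@raspberrypi:~ $ passwd
...
pi@raspberrypi:~ $ sudo tee -a /etc/dhcpcd.conf > /dev/null << EOF
interface eth0
static ip_address=192.168.1.33/24
static routers=192.168.1.1
static domain_name_servers=192.168.1.71
EOF
```

Give each Pi its own `ip_address`. In this cluster ribbon uses 192.168.1.31, rider 192.168.1.32, ringo 192.168.1.33 and riviera 192.168.1.34.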
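Because `tee -a` appends unconditionally, repeating this step leaves duplicate `interface eth0` stanzas in the file. A minimal idempotent sketch; the function name `add_static_ip` is hypothetical, it reuses the router and DNS addresses shown above, and it assumes that an existing `interface eth0` line means the interface is already configured:

```shell
# Hypothetical helper (not part of Raspbian): append a static eth0 stanza to a
# dhcpcd.conf-style file unless an "interface eth0" line is already present.
# Router and DNS addresses are the ones used in this setup.
# Usage: add_static_ip FILE ADDRESS/PREFIX
add_static_ip() {
  local conf="$1" addr="$2"
  # -s: stay quiet if the file does not exist yet (cat creates it below).
  if grep -qs '^interface eth0$' "$conf"; then
    echo "eth0 is already configured in $conf, leaving it unchanged" >&2
    return 0
  fi
  cat >> "$conf" <<EOF
interface eth0
static ip_address=${addr}
static routers=192.168.1.1
static domain_name_servers=192.168.1.71
EOF
}
```

To run it as root on ribbon: `sudo bash -c "$(declare -f add_static_ip); add_static_ip /etc/dhcpcd.conf 192.168.1.31/24"`.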

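```
pi@raspberrypi:~ $ sudo vi /boot/cmdline.txt
```

Append the following flags to the end of the existing line; `cmdline.txt` must remain a single line:

```
cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory
```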
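Editing the file by hand on every Pi is easy to get wrong. A sketch that appends each of the three flags only when it is missing; `add_cgroup_flags` is a hypothetical name, and it assumes the file holds exactly one line, as `cmdline.txt` does:

```shell
# Hypothetical helper: append the cgroup flags to the single kernel command
# line in a cmdline.txt-style file, skipping flags that are already present.
# Usage: add_cgroup_flags FILE
add_cgroup_flags() {
  local file="$1" flag line
  line="$(head -n 1 "$file")"
  for flag in cgroup_enable=cpuset cgroup_memory=1 cgroup_enable=memory; do
    # Pad with spaces so a flag only matches as a whole word.
    case " $line " in
      *" $flag "*) ;;                # already present, keep as is
      *) line="$line $flag" ;;
    esac
  done
  # Rewrite the file as exactly one line.
  printf '%s\n' "$line" > "$file"
}
```

Keep a backup and run it as root: `sudo cp /boot/cmdline.txt /boot/cmdline.txt.bak && sudo bash -c "$(declare -f add_cgroup_flags); add_cgroup_flags /boot/cmdline.txt"`.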

```
pi@raspberrypi:~ $ sudo raspi-config
...
```

In the menu, select 2 Network Options -> N1 Hostname and set the node's name (ribbon, rider, ringo or riviera).

```
pi@ribbon:~ $ sudo apt update && sudo apt full-upgrade
pi@ribbon:~ $ sudo systemctl reboot
```

Create the k3s cluster

Bootstrap the k3s server

```
pi@ribbon:~ $ curl -sfL https://get.k3s.io | sh -
...
pi@ribbon:~ $ sudo systemctl status k3s
...
pi@ribbon:~ $ sudo cat /var/lib/rancher/k3s/server/node-token
K1024ae544d0e6e5333d20c58dc2b28e5dbdbc96327144e1bc85691f3c75c692b9a::server:de691268c3a6fea976138de6add741b0
```

Join a worker

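- rider.bierochs.org
- ringo.bierochs.org
- riviera.bierochs.org

Run the following on each worker (shown for rider). With `K3S_URL` set, the install script sets k3s up as an agent (service `k3s-agent`) that joins the server at that URL, authenticating with the node token from ribbon:

```
pi@rider:~ $ export K3S_URL="https://ribbon.bierochs.org:6443"
pi@rider:~ $ export K3S_TOKEN="K1024ae544d0e6e5333d20c58dc2b28e5dbdbc96327144e1bc85691f3c75c692b9a::server:de691268c3a6fea976138de6add741b0"
pi@rider:~ $ curl -sfL https://get.k3s.io | sh -
...
pi@rider:~ $ sudo systemctl status k3s-agent
...
```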
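Instead of logging in to each worker, the join can be driven from one machine over SSH. A sketch, assuming passwordless `sudo` for `pi` (the Raspbian default) and a token without single quotes; `k3s_join_cmd` is a hypothetical helper that only builds the command string and executes nothing:

```shell
# Hypothetical helper: print the command that installs k3s as an agent and
# joins it to the server. Values are wrapped in single quotes, so they must
# not contain single quotes themselves.
# Usage: k3s_join_cmd SERVER_URL TOKEN
k3s_join_cmd() {
  local url="$1" token="$2"
  # Assignments placed before "sh -" end up in the install script's environment.
  printf "curl -sfL https://get.k3s.io | K3S_URL='%s' K3S_TOKEN='%s' sh -\n" "$url" "$token"
}
```

For example: `TOKEN=$(ssh pi@ribbon.bierochs.org sudo cat /var/lib/rancher/k3s/server/node-token)`, then `for h in rider ringo riviera; do ssh "pi@$h.bierochs.org" "$(k3s_join_cmd https://ribbon.bierochs.org:6443 "$TOKEN")"; done`.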

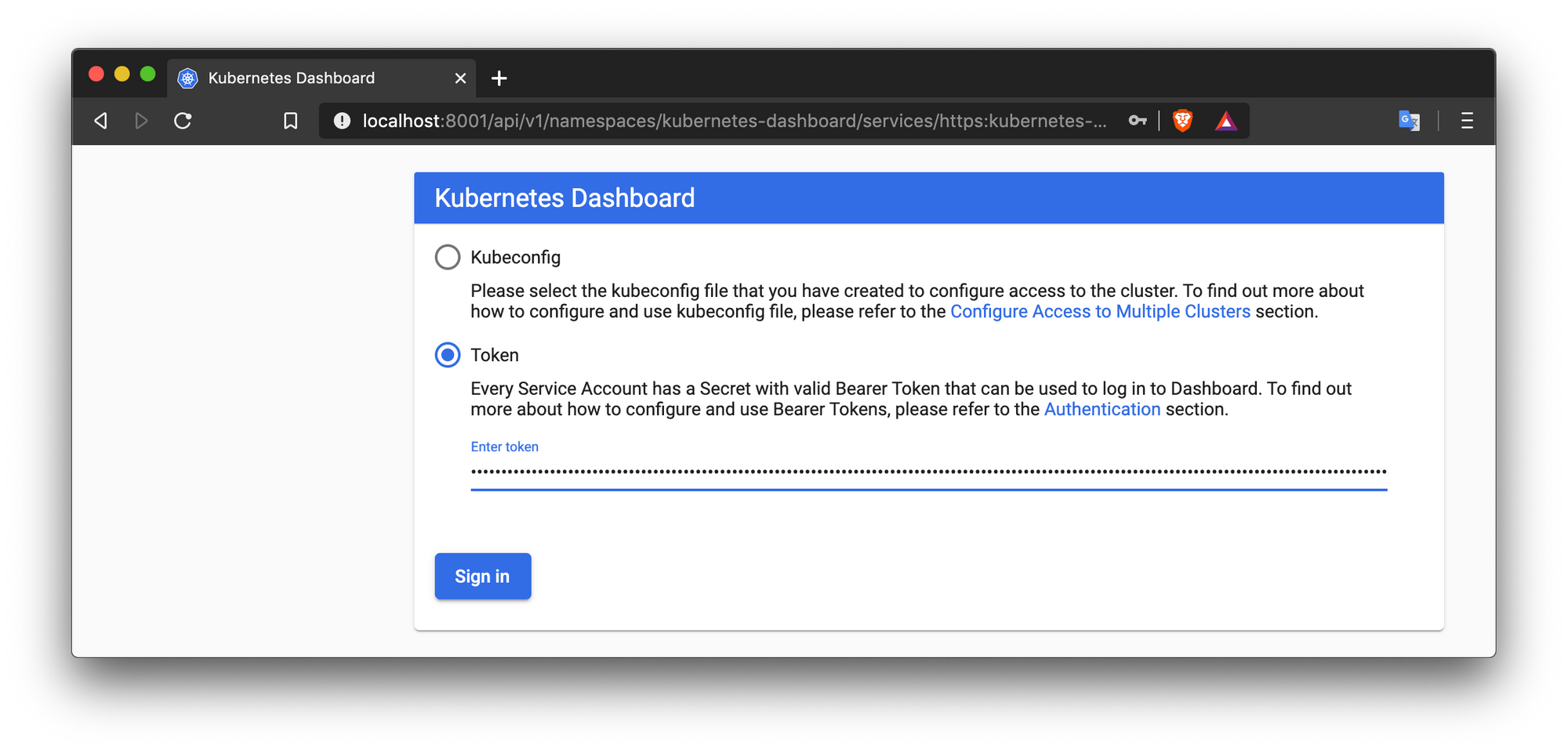

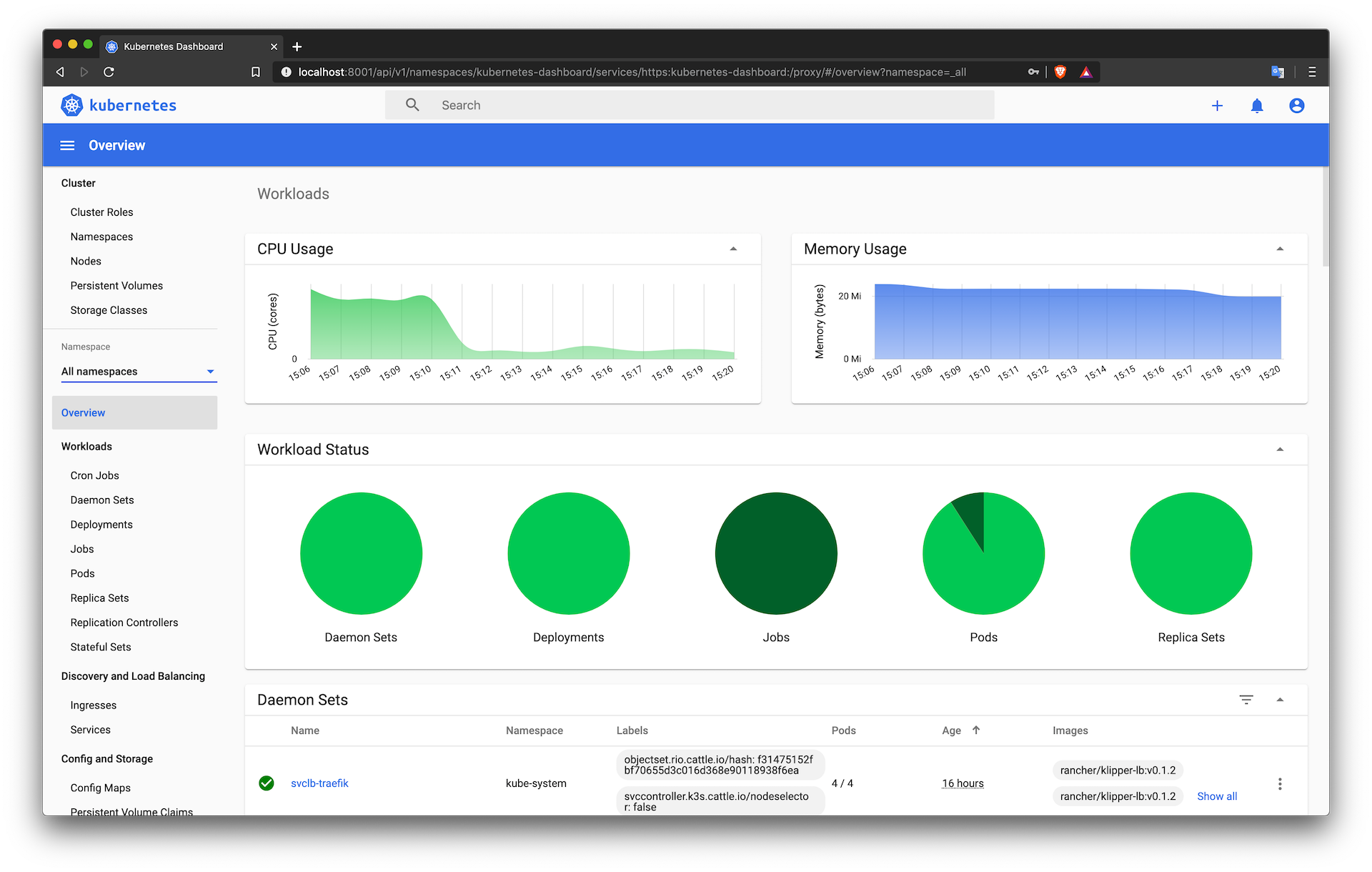

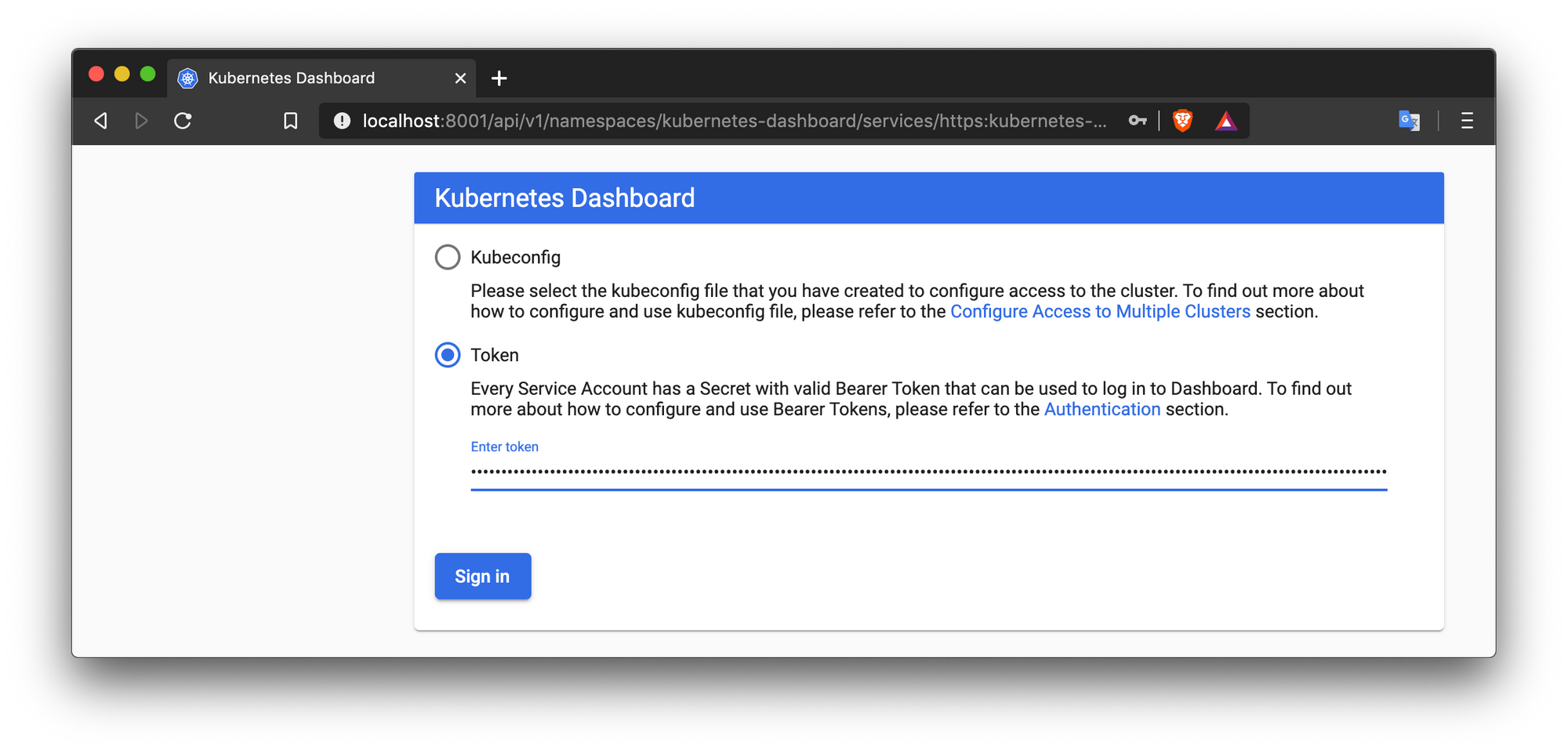

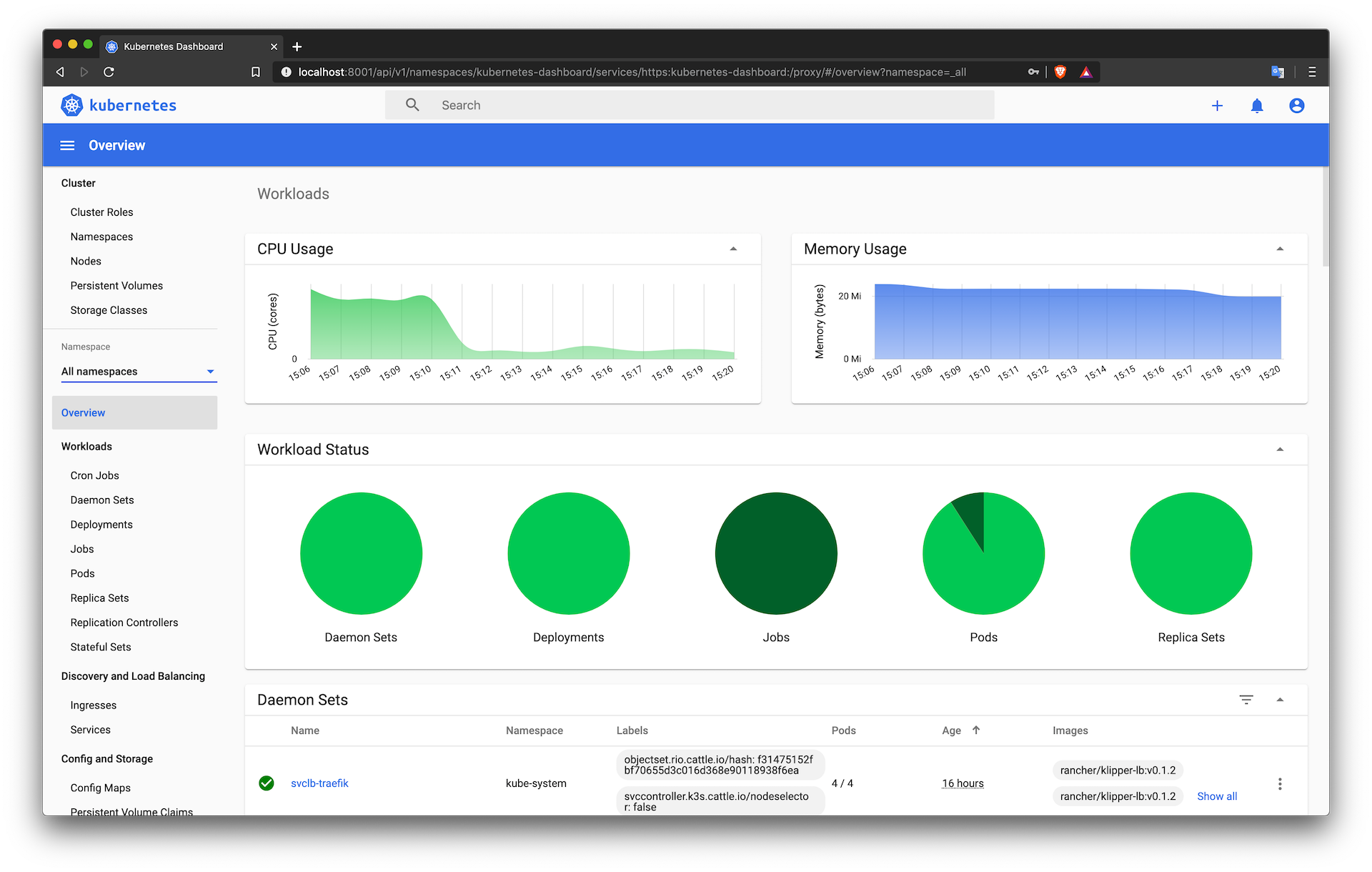

Web UI (Dashboard)

k3s writes the cluster's kubeconfig to `/etc/rancher/k3s/k3s.yaml` on the server. Copy its entries into the local kubeconfig (`~/.kube/config`), point `server` at ribbon instead of `127.0.0.1`, and name the cluster, user and context `rpi`:

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJWakNCL3FBREFnRUNBZ0VBTUFvR0NDcUdTTTQ5QkFNQ01DTXhJVEFmQmdOVkJBTU1HR3N6Y3kxelpYSjIKWlhJdFkyRkFNVFUzT0RBd01ESXlNekFlRncweU1EQXhNREl5TVRJek5ETmFGdzB5T1RFeU16QXlNVEl6TkROYQpNQ014SVRBZkJnTlZCQU1NR0dzemN5MXpaWEoyWlhJdFkyRkFNVFUzT0RBd01ESXlNekJaTUJNR0J5cUdTTTQ5CkFnRUdDQ3FHU000OUF3RUhBMElBQkZIai8waG4vUWZleW5zMWppcUJVQjdQUjV6NnZsamVNK3lBRGhzOGJ2UlUKU0I1dEF5elhFNHYzUUFKODZSTFVqVzRNei9HelhmMnBEenZZYnp5NTV1Q2pJekFoTUE0R0ExVWREd0VCL3dRRQpBd0lDcERBUEJnTlZIUk1CQWY4RUJUQURBUUgvTUFvR0NDcUdTTTQ5QkFNQ0EwY0FNRVFDSURJOXhadVo3R2tHCkQ2aFhyR1lEVi8xYkJjd2ZsMU8wTEhqMWZ6L0VrQzgyQWlBVklVOEthVEVNQU5MWm9YRFFKVE1JcDB3a0VEWHEKRzhWSUVlNHF4NXVsL1E9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
    server: https://ribbon.bierochs.org:6443
  name: rpi
contexts:
- context:
    cluster: rpi
    user: rpi
  name: rpi
current-context: default
kind: Config
preferences: {}
users:
- name: rpi
  user:
    password: dc10b870bd8413b13116c047f2677c81
    username: admin
```
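Rather than editing these entries by hand, they can be derived from the server's file. A sketch, assuming the copied `k3s.yaml` points at `https://127.0.0.1:6443` and names its cluster, user and context `default`, as k3s generates it; `k3s_kubeconfig` is a hypothetical helper that writes the rewritten kubeconfig to stdout:

```shell
# Hypothetical helper: rewrite a copied k3s.yaml so it works from another
# machine. Assumes the k3s defaults: server https://127.0.0.1:6443 and
# cluster/user/context entries all named "default".
# Usage: k3s_kubeconfig SRC SERVER_HOST NAME > DEST
k3s_kubeconfig() {
  local src="$1" host="$2" name="$3"
  # 1) point the API server at the LAN hostname instead of loopback
  # 2) rename every "...: default" value (names, references, current-context)
  sed -e "s#https://127\.0\.0\.1:6443#https://${host}:6443#" \
      -e "s/: default\$/: ${name}/" \
      "$src"
}
```

Usage: `ssh pi@ribbon.bierochs.org sudo cat /etc/rancher/k3s/k3s.yaml > k3s.yaml`, then `k3s_kubeconfig k3s.yaml ribbon.bierochs.org rpi > rpi.yaml`, and merge it with `KUBECONFIG=~/.kube/config:rpi.yaml kubectl config view --flatten > merged.yaml` before replacing `~/.kube/config`.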

Switch kubectl to the cluster's context:

```
$ kubectl config current-context
$ kubectl config use-context rpi
```

Remove any previous dashboard installation (the NotFound error only means there was none), then deploy the dashboard:

```
$ kubectl delete ns kubernetes-dashboard
Error from server (NotFound): namespaces "kubernetes-dashboard" not found
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta8/aio/deploy/recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard unchanged
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard unchanged
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created
$ kubectl get namespaces --show-labels
NAME                   STATUS   AGE   LABELS
default                Active   16h   <none>
kube-system            Active   16h   <none>
kube-public            Active   16h   <none>
kube-node-lease        Active   16h   <none>
kubernetes-dashboard   Active   16s   <none>
```

Start the API server proxy. It runs in the foreground, so continue in a second terminal:

```
$ kubectl proxy
Starting to serve on 127.0.0.1:8001
```

While the proxy runs, the dashboard is reachable at http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/.

Create a service account for logging in to the dashboard:

```
$ tee dashboard-adminuser.yaml > /dev/null << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
EOF
$ kubectl apply -f dashboard-adminuser.yaml
serviceaccount/admin-user created
```

Bind it to the `cluster-admin` cluster role, which grants full access to the cluster:

```
$ tee dashboard-adminuser-crb.yaml > /dev/null << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF
$ kubectl apply -f dashboard-adminuser-crb.yaml
clusterrolebinding.rbac.authorization.k8s.io/admin-user created
```

Print the token of the `admin-user` service account; it is used to log in to the dashboard:

```
$ kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')
Name:         admin-user-token-9st4t
Namespace:    kubernetes-dashboard
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin-user
              kubernetes.io/service-account.uid: fce0eedf-0e57-4d49-aac8-efe663308f85

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     526 bytes
namespace:  20 bytes
token:      eyJhbGciOiJSUzI1NiIsImtpZCI6InJtR0UzT0JfMVR0TGpfVTVqUWI1RjVycGdsMDlhLTM4UEVRekZuWUU4ZUUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLTlzdDR0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJmY2UwZWVkZi0wZTU3LTRkNDktYWFjOC1lZmU2NjMzMDhmODUiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.QR_1l4w2VZaxkPjy9edZz-wAJyQrSZfd04XcUVOZ42KsfNcpdw2c_PyAndGy2WQavM2Rcz1BrIm2cdRDm8iyHoXWurnDVXi-HzBYmlvFOCMER8FEP10w_Nr-SkmJG_bwp7GFHOC0mYZxW-zs1PWGUsHYt8cgSJEeq_nzebyDclss_zlTbaKfZFN3LKJsdzpzPJFYm42W9zAKKk8xnCOHMRw6vOd6JdWVJWPFuDRYIsB1aR55JpXWd5O-k7igckJMO-pG2Y77t__ujm2okt--uniZZfHVkRt0yfF0C1kAuHw2vEwY97aoRCcpXtxDcfU7yCosi09fMxb4dBCBir5fRQ
```
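To copy the token without the surrounding fields, a small filter over the `describe` output is enough. A sketch; `extract_token` is a hypothetical name, and it assumes the `token:` label starts its line, as in the output above:

```shell
# Hypothetical helper: read `kubectl describe secret` output on stdin and
# print only the value of the "token:" field.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}
```

Usage: `kubectl -n kubernetes-dashboard describe secret admin-user-token-9st4t | extract_token`. The same value is stored base64-encoded under `.data.token`, so `kubectl -n kubernetes-dashboard get secret admin-user-token-9st4t -o jsonpath='{.data.token}' | base64 --decode` works as well.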

Playing Around

Setting the Proper Context

```
$ kubectl config current-context
$ kubectl config use-context rpi
```

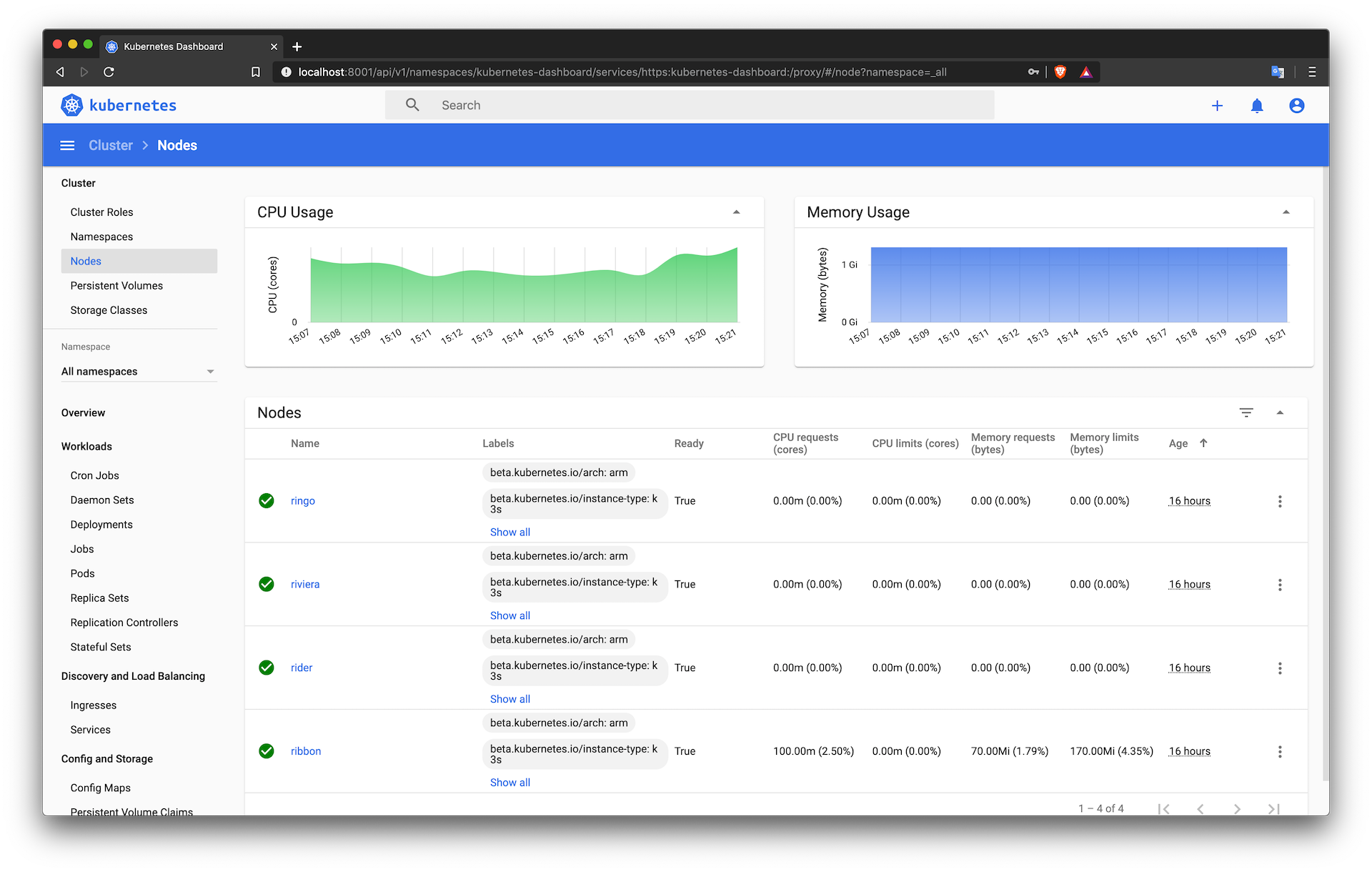

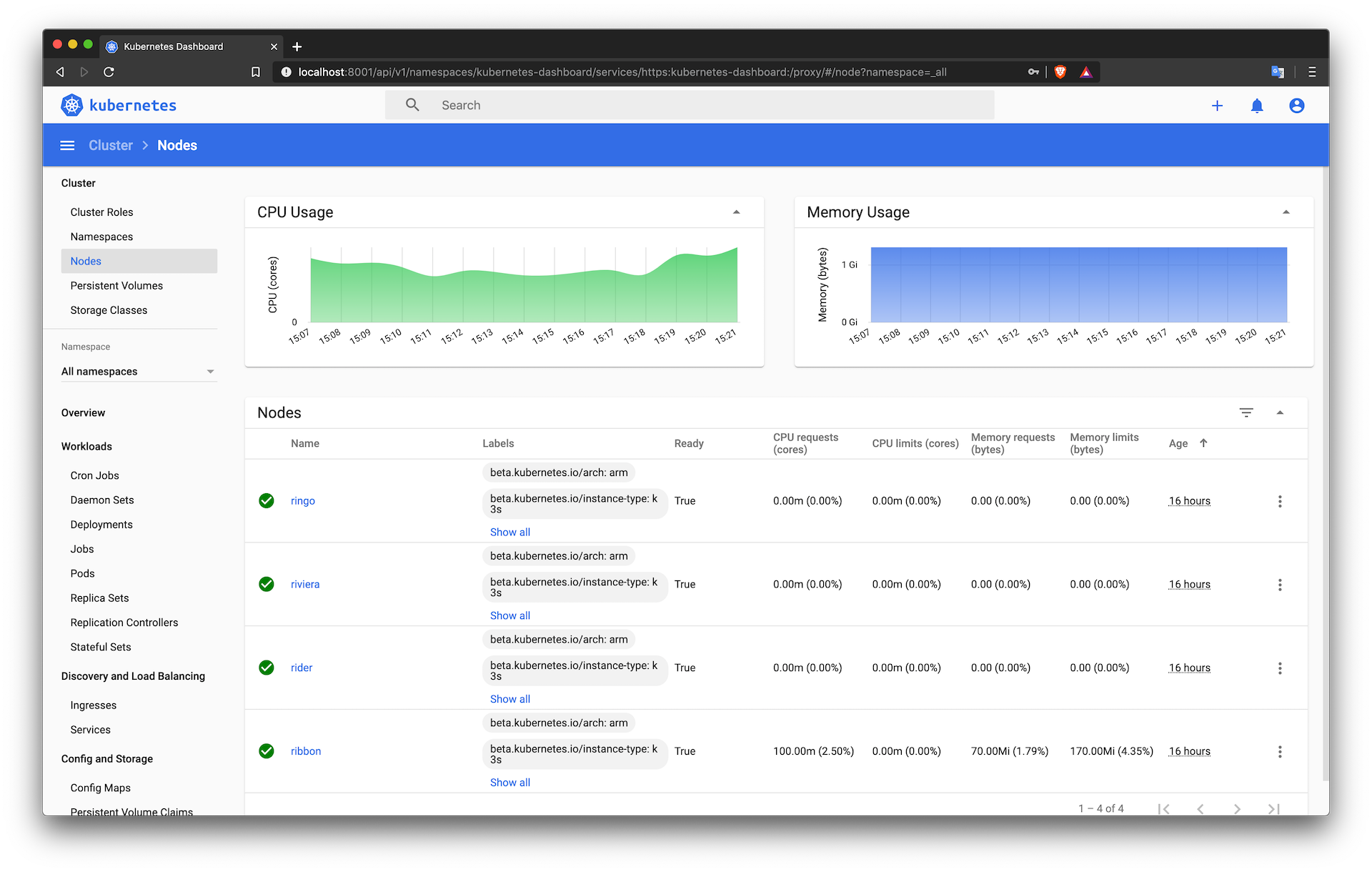

Nodes

```
$ kubectl get nodes
NAME      STATUS   ROLES    AGE   VERSION
ribbon    Ready    master   36m   v1.16.3-k3s.2
rider     Ready    <none>   17m   v1.16.3-k3s.2
ringo     Ready    <none>   12m   v1.16.3-k3s.2
riviera   Ready    <none>   14m   v1.16.3-k3s.2
$ kubectl get nodes -o wide
NAME      STATUS   ROLES    AGE   VERSION         INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                         KERNEL-VERSION   CONTAINER-RUNTIME
riviera   Ready    <none>   25m   v1.16.3-k3s.2   192.168.1.34   <none>        Raspbian GNU/Linux 10 (buster)   4.19.75-v7+      containerd://1.3.0-k3s.5
ribbon    Ready    master   47m   v1.16.3-k3s.2   192.168.1.31   <none>        Raspbian GNU/Linux 10 (buster)   4.19.75-v7l+     containerd://1.3.0-k3s.5
rider     Ready    <none>   27m   v1.16.3-k3s.2   192.168.1.32   <none>        Raspbian GNU/Linux 10 (buster)   4.19.75-v7+      containerd://1.3.0-k3s.5
ringo     Ready    <none>   23m   v1.16.3-k3s.2   192.168.1.33   <none>        Raspbian GNU/Linux 10 (buster)   4.19.75-v7+      containerd://1.3.0-k3s.5
```
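To confirm that every node picked up the static address it was given during setup, the wide listing can be reduced to address/name pairs (for example for an `/etc/hosts` file). A sketch; `node_hosts` is a hypothetical name, and it assumes INTERNAL-IP is the sixth column, as in the output above:

```shell
# Hypothetical helper: read `kubectl get nodes -o wide` output on stdin and
# print "INTERNAL-IP NAME" pairs, sorted by address.
node_hosts() {
  # NR > 1 skips the header; OS-IMAGE contains spaces, but it comes after $6.
  awk 'NR > 1 { print $6, $1 }' | sort
}
```

Usage: `kubectl get nodes -o wide | node_hosts`.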

```
$ kubectl get node --selector='!node-role.kubernetes.io/master'
NAME      STATUS   ROLES    AGE   VERSION
rider     Ready    <none>   17m   v1.16.3-k3s.2
ringo     Ready    <none>   13m   v1.16.3-k3s.2
riviera   Ready    <none>   15m   v1.16.3-k3s.2
$ kubectl get node --selector='node-role.kubernetes.io/master'
NAME     STATUS   ROLES    AGE   VERSION
ribbon   Ready    master   37m   v1.16.3-k3s.2
```

Print the conditions of each node on a line of its own, then keep the lines containing `Ready=True`. Without the `{"\n"}` separator all nodes end up on one line, and `grep` can only keep or drop the whole list:

```
$ JSONPATH='{range .items[*]}{@.metadata.name}:{range @.status.conditions[*]}{@.type}={@.status};{end}{"\n"}{end}' \
  && kubectl get nodes -o jsonpath="$JSONPATH" | grep "Ready=True"
ribbon:NetworkUnavailable=False;MemoryPressure=False;DiskPressure=False;PIDPressure=False;Ready=True;
rider:NetworkUnavailable=False;MemoryPressure=False;DiskPressure=False;PIDPressure=False;Ready=True;
ringo:NetworkUnavailable=False;MemoryPressure=False;DiskPressure=False;PIDPressure=False;Ready=True;
riviera:NetworkUnavailable=False;MemoryPressure=False;DiskPressure=False;PIDPressure=False;Ready=True;
```
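The jsonpath output can also be reduced to just the names of the Ready nodes. A sketch that accepts both the single-line and the one-node-per-line form of that output; `ready_nodes` is a hypothetical name, and it relies on node names never containing `:` (Kubernetes node names cannot):

```shell
# Hypothetical helper: read "name:Type=Status;Type=Status;..." records on stdin
# (all on one line or one node per line) and print the nodes with Ready=True.
ready_nodes() {
  # One field per line; a field containing ":" starts a new node.
  tr ';' '\n' | awk '
    index($0, ":") { split($0, a, ":"); node = a[1]; $0 = a[2] }
    $0 == "Ready=True" { print node }
  '
}
```

Usage: `kubectl get nodes -o jsonpath="$JSONPATH" | ready_nodes`.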

Events

```
$ kubectl get events --sort-by=.metadata.creationTimestamp
LAST SEEN   TYPE      REASON                    OBJECT         MESSAGE
39m         Normal    Starting                  node/ribbon    Starting kubelet.
39m         Warning   InvalidDiskCapacity       node/ribbon    invalid capacity 0 on image filesystem
39m         Normal    NodeHasSufficientMemory   node/ribbon    Node ribbon status is now: NodeHasSufficientMemory
39m         Normal    NodeHasNoDiskPressure     node/ribbon    Node ribbon status is now: NodeHasNoDiskPressure
39m         Normal    NodeHasSufficientPID      node/ribbon    Node ribbon status is now: NodeHasSufficientPID
39m         Normal    Starting                  node/ribbon    Starting kube-proxy.
39m         Normal    NodeAllocatableEnforced   node/ribbon    Updated Node Allocatable limit across pods
39m         Normal    RegisteredNode            node/ribbon    Node ribbon event: Registered Node ribbon in Controller
39m         Normal    NodeReady                 node/ribbon    Node ribbon status is now: NodeReady
19m         Normal    Starting                  node/rider     Starting kubelet.
19m         Warning   InvalidDiskCapacity       node/rider     invalid capacity 0 on image filesystem
19m         Normal    NodeHasSufficientMemory   node/rider     Node rider status is now: NodeHasSufficientMemory
19m         Normal    NodeHasNoDiskPressure     node/rider     Node rider status is now: NodeHasNoDiskPressure
19m         Normal    NodeHasSufficientPID      node/rider     Node rider status is now: NodeHasSufficientPID
19m         Normal    Starting                  node/rider     Starting kube-proxy.
19m         Normal    NodeAllocatableEnforced   node/rider     Updated Node Allocatable limit across pods
19m         Normal    NodeReady                 node/rider     Node rider status is now: NodeReady
19m         Normal    RegisteredNode            node/rider     Node rider event: Registered Node rider in Controller
17m         Normal    NodeReady                 node/riviera   Node riviera status is now: NodeReady
17m         Warning   InvalidDiskCapacity       node/riviera   invalid capacity 0 on image filesystem
17m         Normal    NodeHasSufficientMemory   node/riviera   Node riviera status is now: NodeHasSufficientMemory
17m         Normal    NodeHasNoDiskPressure     node/riviera   Node riviera status is now: NodeHasNoDiskPressure
17m         Normal    NodeHasSufficientPID      node/riviera   Node riviera status is now: NodeHasSufficientPID
17m         Normal    NodeAllocatableEnforced   node/riviera   Updated Node Allocatable limit across pods
17m         Normal    Starting                  node/riviera   Starting kubelet.
17m         Normal    Starting                  node/riviera   Starting kube-proxy.
17m         Normal    RegisteredNode            node/riviera   Node riviera event: Registered Node riviera in Controller
15m         Normal    Starting                  node/ringo     Starting kubelet.
15m         Warning   InvalidDiskCapacity       node/ringo     invalid capacity 0 on image filesystem
15m         Normal    NodeHasSufficientMemory   node/ringo     Node ringo status is now: NodeHasSufficientMemory
15m         Normal    NodeHasNoDiskPressure     node/ringo     Node ringo status is now: NodeHasNoDiskPressure
15m         Normal    NodeHasSufficientPID      node/ringo     Node ringo status is now: NodeHasSufficientPID
15m         Normal    NodeAllocatableEnforced   node/ringo     Updated Node Allocatable limit across pods
15m         Normal    NodeReady                 node/ringo     Node ringo status is now: NodeReady
15m         Normal    RegisteredNode            node/ringo     Node ringo event: Registered Node ringo in Controller
15m         Normal    Starting                  node/ringo     Starting kube-proxy.
```
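Each node reports an `InvalidDiskCapacity` warning when its kubelet starts. To spot warnings in a longer event list, a sketch that counts Warning events per object; `count_warnings` is a hypothetical name and relies on the column layout above (the header is skipped because `LAST SEEN` is two words there only). kubectl can also filter on the server side with `kubectl get events --field-selector type=Warning`.

```shell
# Hypothetical helper: read `kubectl get events` output on stdin and print
# "OBJECT COUNT" for every object that has Warning events.
count_warnings() {
  # Data rows: $1 = LAST SEEN, $2 = TYPE, $3 = REASON, $4 = OBJECT.
  awk 'NR > 1 && $2 == "Warning" { n[$4]++ }
       END { for (o in n) print o, n[o] }' | sort
}
```

Usage: `kubectl get events | count_warnings`.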

Cluster

```
$ kubectl cluster-info
Kubernetes master is running at https://ribbon.bierochs.org:6443
CoreDNS is running at https://ribbon.bierochs.org:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy
Metrics-server is running at https://ribbon.bierochs.org:6443/api/v1/namespaces/kube-system/services/https:metrics-server:/proxy
```